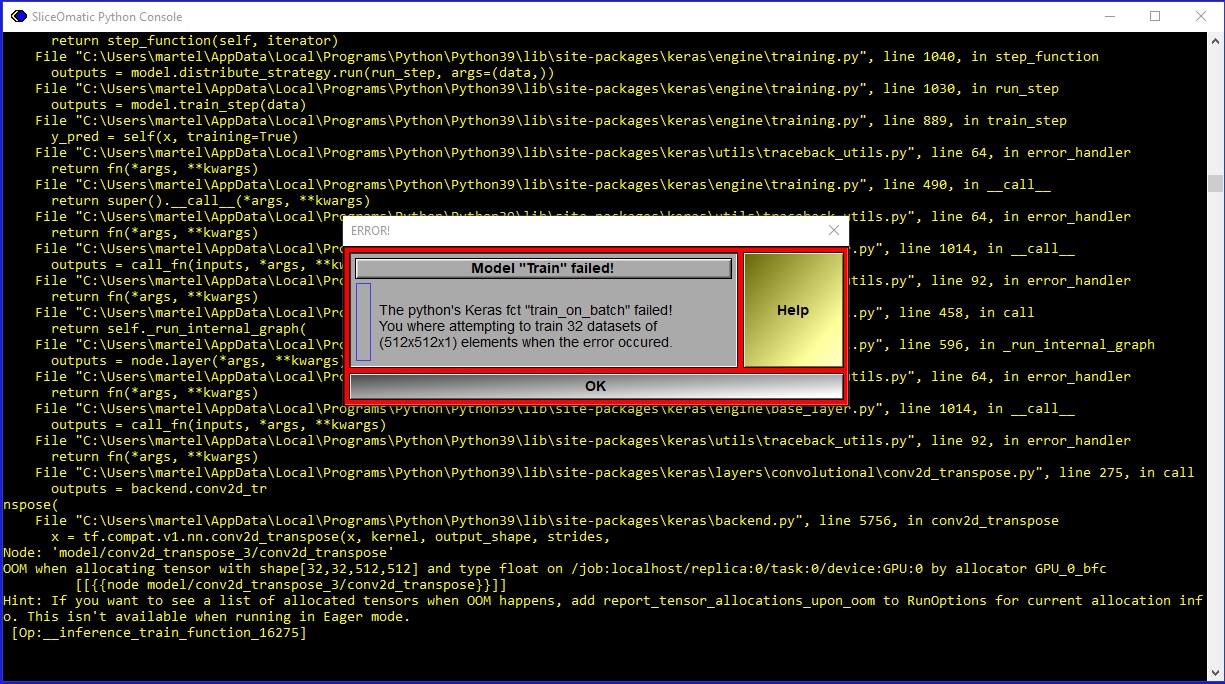

AI training memory problems

When training 2D and 3D AI models, training may crash if not enough memory is available:

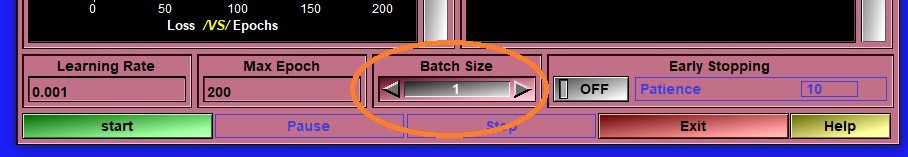

In the 2D mode, the AI can train on a group of images at a time (the "Batch Size"). The only advantage of training on more than one image at a time is speed: it does not affect the precision of the trained model, it just makes training a little faster. So, if you do not have enough memory to train your model, start by reducing the batch size. You can safely reduce it to "1".
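As a rough illustration of why reducing the batch size helps, the sketch below assumes that memory use grows linearly with the batch size: a fixed cost (for example, the model weights) plus a per-image cost. The function `largest_batch_size` and the example numbers are hypothetical and are not part of the software; the real per-image cost depends on the image size, the number of classes and the model.

```python
def largest_batch_size(per_image_bytes: int, available_bytes: int,
                       fixed_bytes: int = 0, max_batch: int = 32) -> int:
    """Hypothetical helper: largest batch size that fits in memory.

    Assumes total memory = fixed_bytes + batch_size * per_image_bytes.
    Returns 0 when not even a single image fits.
    """
    if per_image_bytes <= 0:
        raise ValueError("per_image_bytes must be positive")
    budget = available_bytes - fixed_bytes
    if budget < per_image_bytes:
        return 0  # even a batch size of 1 does not fit
    return min(budget // per_image_bytes, max_batch)


GIB = 2 ** 30
# Example: 8 GiB available, 1 GiB fixed overhead, 1.5 GiB per image.
print(largest_batch_size(int(1.5 * GIB), 8 * GIB, fixed_bytes=1 * GIB))  # prints 4
```

When the function returns 0, lowering the batch size can no longer help; that is the situation where only a lighter model or more memory will do.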

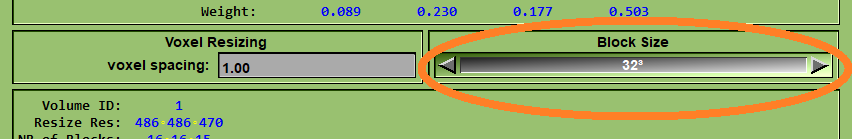

The smallest value for the "Batch Size" is "1", in which case each image is processed individually. This means that you need at least enough memory for the AI to process one image. The amount of memory needed to process one image depends on the size of the image, the number of classes and the AI model used. Since the size of the images cannot easily be reduced, and you probably do not want to reduce the number of classes, if you do not have enough memory to process one image, the only remaining solutions are to switch to a model that uses fewer parameters (such as U-Net Light) or to add more memory to your computer.

In the 3D mode, you have a little more flexibility. There is probably not enough memory to train the AI on a complete volume at once, so the volume is split into sub-volumes of fixed size. The size of these sub-volumes is set in the "Config\PreProc" page. A size of 64 creates sub-volumes of 64x64x64 voxels each, which use the same amount of memory as a 512x512 image, since both contain 262,144 elements (64 × 64 × 64 = 512 × 512).
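The arithmetic behind the 3D sub-volume size can be checked with a short sketch. It assumes non-overlapping sub-volumes, with the last one along each axis padded to full size, and it only compares element counts and the size of one float32 class-score map, not the model's full memory use. All function names are hypothetical.

```python
import math


def subvolume_voxels(size: int) -> int:
    """Number of voxels in one size x size x size sub-volume."""
    return size ** 3


def equivalent_image_side(size: int) -> int:
    """Side of a square 2D image with (about) the same number of pixels."""
    return math.isqrt(subvolume_voxels(size))


def count_subvolumes(shape: tuple[int, int, int], size: int) -> int:
    """Sub-volumes needed to cover a volume (assumes no overlap, padded edges)."""
    return math.prod(math.ceil(dim / size) for dim in shape)


def score_map_bytes(elements: int, num_classes: int, bytes_per_value: int = 4) -> int:
    """Size of one float32 class-score map, only one of many buffers used in training."""
    return elements * num_classes * bytes_per_value


print(subvolume_voxels(64), 512 * 512)          # 262144 262144
print(equivalent_image_side(64))                # 512
print(count_subvolumes((512, 512, 200), 64))    # 256
print(score_map_bytes(subvolume_voxels(64), 3) == score_map_bytes(512 * 512, 3))  # True
```

Because the number of voxels grows with the cube of the sub-volume size, halving it from 64 to 32 leaves each sub-volume with one eighth as many voxels (32,768).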
